/var/log Disk Space Issues | Ubuntu, Kali, Debian Linux | /var/log Fills Up Fast

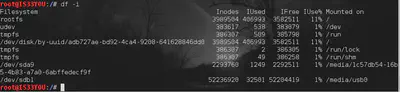

Recently, I noticed that my computer kept running out of disk space for no apparent reason. I hadn’t downloaded any large files and my root partition should not have had any space issues, yet the system kept telling me that I had ‘0’ bytes free on my root (/) drive. As I found that hard to believe, I invoked the ‘df’ command (for disk space usage):

df

So clearly, 100% of the disk partition is in use, and ‘0’ is available to me. Next, I checked whether the system had simply run out of ‘inodes’ to assign to new files; this can happen when there are a huge number of small files on the machine, since every file uses one inode no matter how small it is.

df -i

Only 11% of the inodes were in use, so this was clearly not a problem of running out of inodes. This was completely baffling. The first thing to do was to locate the cause of the problem. Computers never lie. If the machine tells me that I am running out of space on the root drive, then there must be some files that I do not know about; most likely these are ‘system’ files created during routine operations.
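For future reference, the two ‘df’ checks above can be wrapped into one small helper. This is only a sketch: fs_usage is a made-up name, and it assumes a ‘df’ that accepts both -P and -i (GNU coreutils does). Some filesystems report ‘-’ instead of an inode percentage.

```shell
# Print the block and inode usage percentages for the filesystem
# that holds a given path (defaults to /).
# fs_usage is a hypothetical helper name, not a standard tool.
fs_usage() {
    path=${1:-/}
    # -P forces one output line per filesystem, so column 5 is "Use%".
    blocks=$(df -P "$path" | awk 'NR == 2 { print $5 }')
    # -i switches df to inode counts; column 5 is then "IUse%".
    inodes=$(df -P -i "$path" | awk 'NR == 2 { print $5 }')
    printf 'blocks=%s inodes=%s\n' "$blocks" "$inodes"
}

# Example: fs_usage /
```

A blocks value near 100% with a low inodes value points at a few large files rather than many tiny ones.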

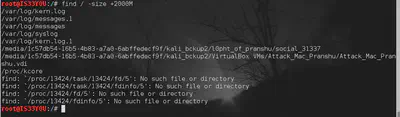

To locate the cause of the problem, I executed the following command to find all files of size greater than ~2GB:

find / -size +2000M
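A slightly more targeted variant of the same search, as a sketch: big_files is a made-up helper name, and the ‘M’ size suffix assumes GNU or BSD ‘find’. It stays on one filesystem, looks only at regular files, and sorts the results so the worst offenders come first.

```shell
# List regular files larger than a threshold, biggest first.
# big_files is a hypothetical helper; arguments: directory, size in MiB.
big_files() {
    dir=${1:-/}
    min_mib=${2:-2000}
    # -xdev keeps find on the starting filesystem, so /proc, /sys and
    # other mounts are skipped; -type f ignores directories and devices.
    # du -k prints the disk usage in KiB in front of each path.
    find "$dir" -xdev -type f -size "+${min_mib}M" -exec du -k {} + 2>/dev/null |
        sort -rn
}

# Example (as root): big_files / 2000
```

Errors such as ‘Permission denied’ are discarded here, so run it as root if you want a complete picture.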

Clearly, the folder /var/log needs my attention. Seems like some kernel log files are humongous in size and have not been ‘rotated’ (explained later). So, I listed the contents of this directory arranged in order of decreasing size:

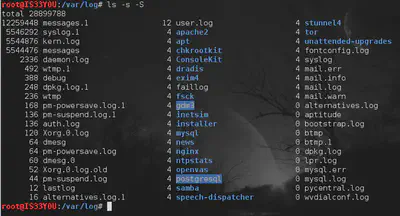

ls -s -S

That one log file, ‘messages.1’, was 12 GB in size, and the next two were 5.5 GB each. So this is what had been eating up my space. The first thing I did was run ‘logrotate’:

/etc/cron.daily/logrotate

It ran for a while as it rotated the logs. logrotate is meant to automate the administration of log files on systems that generate a large volume of logs. It handles rotating, compressing, removing, and mailing log files. See ‘man logrotate’ for the details.
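Before letting logrotate loose, it can help to see what it intends to do. A minimal sketch, assuming the main configuration lives at /etc/logrotate.conf (the usual place on Debian-based systems); logrotate_dry_run is a made-up helper name:

```shell
# Show what logrotate would do without rotating anything.
# logrotate_dry_run is a hypothetical helper name.
logrotate_dry_run() {
    conf=${1:-/etc/logrotate.conf}
    if ! command -v logrotate >/dev/null 2>&1; then
        echo "logrotate not found in PATH" >&2
        return 127
    fi
    # -d (debug) is a dry run: it prints its decisions and makes no
    # changes to the logs or to the logrotate state file.
    logrotate -d "$conf"
}

# Example (as root): logrotate_dry_run
```

The debug output says, per log file, whether it considers the file due for rotation, which is a quick way to spot a log that is being skipped.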

What I hoped by running logrotate was that it would rotate and compress the old log files so I can quickly remove those from my system. Why didn’t I just delete that ‘/var/log’ directory directly? Because that would break things. ‘/var/log’ is needed by the system and the system expects to see it. Deleting it is a bad idea. So, I needed to ensure that I don’t delete anything of significance.

After a while, logrotate completed execution and I was able to see some compressed .gz files in this directory. I quickly deleted these.

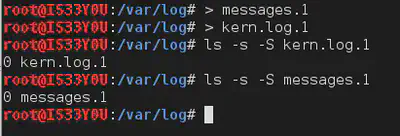

Still, there were two files of around 5 GB left: messages.1 and kern.log.1. Since these had already been rotated, I figured it would be safe to remove them as well. But instead of using rm, I decided to just empty them by truncating each one in place (in case they were still being used somewhere):

: > messages.1

: > kern.log.1

The size of both of these was reduced to ‘0’ bytes. Great! Freed up a lot of disk space this way and nothing ‘broken’ in the process.
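Emptying a file instead of deleting it matters when some process still has it open: a deleted file keeps occupying disk space until the last process closes it, whereas truncating frees the space right away and leaves the same file in place. A small sketch of that idea; empty_log is a made-up helper name:

```shell
# Empty one or more log files in place instead of deleting them.
# empty_log is a hypothetical helper name.
empty_log() {
    for log_file in "$@"; do
        # ":" is a no-op command; the redirection truncates the file
        # to 0 bytes while keeping its inode, owner and permissions.
        : > "$log_file"
    done
}

# Example (as root, inside /var/log): empty_log messages.1 kern.log.1
```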

How did the log files become so large over such a small time period?

This is killing me. Normally, log files should not reach this kind of size if logrotate is doing its job properly and everything is running right. I am still interested in knowing how the log files got so huge in the first place. Is it some service, application or process generating a lot of errors? Or is logrotate not being executed by ‘cron’? I don’t know. Before ‘emptying’ these log files I did take a look inside them to find repetitive patterns. But then I quickly gave up on reading 5 GB files as I was short on time.
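Reading a 5 GB file by eye is hopeless, but counting repeated messages is cheap. A rough sketch, assuming lines in the traditional syslog layout (‘Mon DD HH:MM:SS host program[pid]: message’); top_messages is a made-up helper name. It drops the timestamp, hostname and PID so that identical messages collapse together, then prints the most frequent ones.

```shell
# Print the most frequent messages in a syslog-style file.
# top_messages is a hypothetical helper; arguments: file (or - for
# standard input) and how many entries to show.
top_messages() {
    log_file=${1:--}
    count=${2:-10}
    # Blank out month, day, time and hostname (fields 1-4), then strip
    # the leading spaces and any "[pid]" so repeats look identical.
    awk '{ $1 = $2 = $3 = $4 = ""; print }' "$log_file" |
        sed -e 's/^ *//' -e 's/\[[0-9]*]//' |
        sort | uniq -c | sort -rn | head -n "$count"
}

# Example on a sample, so sort does not need much temporary space:
#   tail -n 100000 /var/log/messages.1 | top_messages - 20
```

Working on a ‘tail’ sample is deliberate: ‘sort’ spills large inputs to temporary files, which is the last thing a full disk needs.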

Since this is my personal laptop that I shut down at night, as opposed to a server that is up all the time, I have installed ‘anacron’ and will set ‘logrotate’ to run under ‘anacron’ instead of cron. I did this because I suspect that cron is not executing logrotate daily: a cron job scheduled for a time when the machine is off simply never runs, whereas anacron catches up on missed jobs at the next boot. We will see what the results are.
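One way to check whether the daily jobs are actually running is to look at the timestamp anacron keeps for them. This is a sketch under assumptions: /var/spool/anacron is the usual spool directory on Debian-based systems, ‘cron.daily’ is the job name used in the stock /etc/anacrontab, and last_daily_run is a made-up helper name.

```shell
# Print the date (YYYYMMDD) on which anacron last ran the daily jobs.
# last_daily_run is a hypothetical helper; the spool path and the
# "cron.daily" job name are assumptions based on Debian defaults.
last_daily_run() {
    spool_dir=${1:-/var/spool/anacron}
    stamp="$spool_dir/cron.daily"
    if [ -r "$stamp" ]; then
        cat "$stamp"
    else
        echo "no readable timestamp at $stamp" >&2
        return 1
    fi
}

# Example (as root): last_daily_run
```

If the date printed is days old on a machine that is used every day, the daily jobs, logrotate included, are not being run.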

I will update this post when I have discovered the root cause of this problem.